BRINGING GENAI TO YOUR WAREHOUSE

LLM & CORTEX FUNCTIONS

(IN SNOWFLAKE)

In this blog, I’ll explore the GEN-AI integration Snowflake offers and walk through how to use the functionalities that make it possible, how to account for the cost of using the models, and how to work around the obstacles posed by model availability across regions.

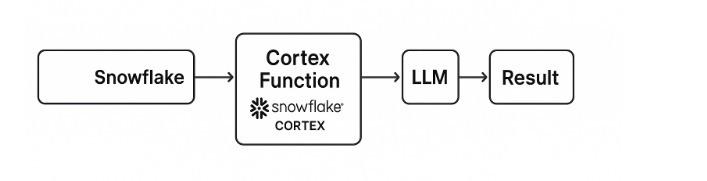

Snowflake provides GEN-AI integration through the functions in the CORTEX schema of the SNOWFLAKE database. Cortex is the name Snowflake uses for its GEN-AI integration. The functions in the CORTEX schema use large language models (LLMs) trained by researchers at companies such as Meta, Google, Reka and others. In addition, there is Snowflake Arctic, Snowflake’s own model.

The models are hosted and managed by Snowflake and are already trained, so you do not need to train them yourself. If you need a customized model, you can instead fine-tune a supported base model with the FINETUNE function in the CORTEX schema.
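As a rough sketch of what fine-tuning looks like in SQL, assuming the FINETUNE signature as I understand it from Snowflake’s documentation ('CREATE', output model name, base model, training query, validation query): the table names support_train and support_validation and the model name support_reply_model are hypothetical, and which base models can be fine-tuned depends on your region, so verify both before running it.

```sql
-- Start a fine-tuning job. All object names below are hypothetical.
-- The training and validation queries are expected to return
-- a PROMPT column and a COMPLETION column.
SELECT SNOWFLAKE.CORTEX.FINETUNE(
    'CREATE',
    'support_reply_model',                               -- name of the resulting model
    'mistral-7b',                                         -- base model to fine-tune
    'SELECT prompt, completion FROM support_train',       -- training data
    'SELECT prompt, completion FROM support_validation'   -- validation data
);

-- The CREATE call returns a job ID; replace the placeholder with it to check progress.
SELECT SNOWFLAKE.CORTEX.FINETUNE('DESCRIBE', '<job_id>');
```

Fine-tuning runs as an asynchronous job, which is why progress is checked with a separate call rather than waiting on the CREATE statement.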

WHAT CORTEX FUNCTIONS CAN DO

- Cortex lets you use LLMs such as Claude and Llama inside Snowflake.

- No model training needed. Just call built-in SQL/Python functions.

- Supports summarization, sentiment, Q&A, translation, and more.

- Costs vary by model and region.

- Secure access with role-based controls and model allowlists.

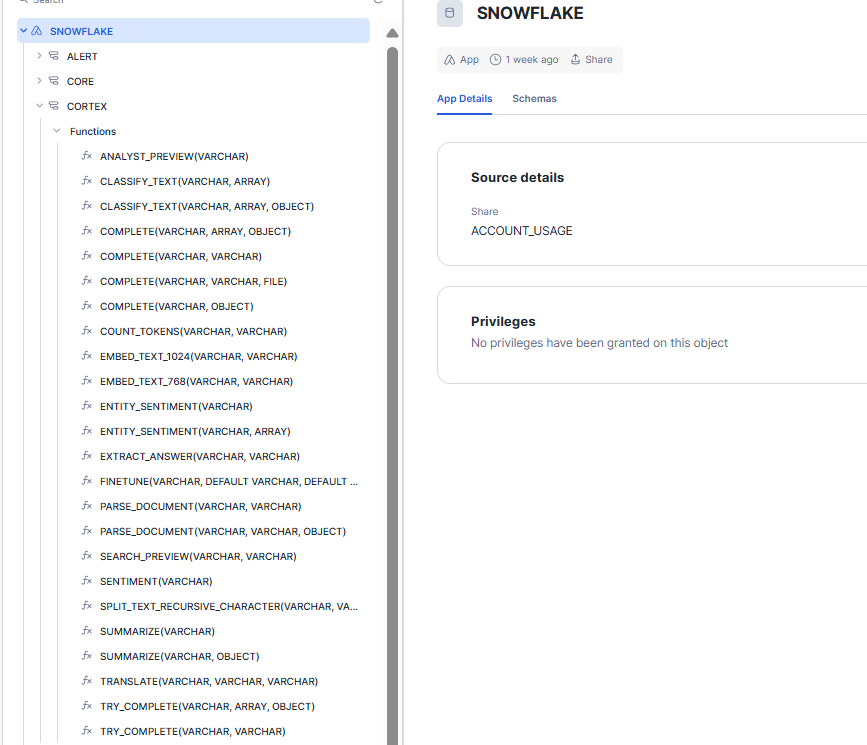

As the image shows, Snowflake offers a variety of functions for GEN-AI integration. Some are helpers, while others provide AI analysis. These are the most commonly used functions that provide AI analysis:

- COMPLETE / TRY_COMPLETE: Depending on the model passed as a parameter, these functions can generate text, review content, compare and classify images, analyze graphs and extract entities from images. COMPLETE and TRY_COMPLETE are essentially the same function; they differ only in error handling. TRY_COMPLETE returns NULL when an error occurs, whereas COMPLETE raises an error.

** Not all models support image inputs; this depends on each model’s capabilities.

- SUMMARIZE: Summarizes text in supported languages.

- SENTIMENT: Scores the sentiment of a text, such as a customer review, on a scale from -1 (negative) to 1 (positive), with values near 0 indicating neutral sentiment.

- EXTRACT_ANSWER: Extracts the answer to a question from unstructured text.

- TRANSLATE: Translates text between supported languages.

- CLASSIFY_TEXT: Classifies the given text into one of the categories passed to the function.

- PARSE_DOCUMENT: Extracts text, and optionally layout, from documents stored on a stage.
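To make the list above more concrete, here is a sketch of one call per function in SQL. The model name, sample texts, categories, and the stage @docs_stage with the file contract.pdf are illustrative assumptions; check that the model is available in your region before running the COMPLETE examples.

```sql
-- Free-form generation with a named model
SELECT SNOWFLAKE.CORTEX.COMPLETE('mistral-large2', 'Explain what a data warehouse is in one sentence.');

-- Same call, but returns NULL instead of raising an error if something fails
SELECT SNOWFLAKE.CORTEX.TRY_COMPLETE('mistral-large2', 'Explain what a data warehouse is in one sentence.');

-- Summarize a piece of text
SELECT SNOWFLAKE.CORTEX.SUMMARIZE(
    'The new release adds faster queries, improved caching and a redesigned dashboard. '
    || 'Several customers reported that report generation time dropped noticeably.');

-- Sentiment score between -1 (negative) and 1 (positive)
SELECT SNOWFLAKE.CORTEX.SENTIMENT('The delivery was late, but support fixed it quickly.');

-- Answer a question from unstructured text
SELECT SNOWFLAKE.CORTEX.EXTRACT_ANSWER(
    'Order 1042 was shipped from Rotterdam on 3 March.',
    'Where was the order shipped from?');

-- Translate from German ('de') to English ('en')
SELECT SNOWFLAKE.CORTEX.TRANSLATE('Wie geht es dir?', 'de', 'en');

-- Pick one of the supplied categories
SELECT SNOWFLAKE.CORTEX.CLASSIFY_TEXT(
    'My invoice shows the wrong amount.',
    ['billing', 'shipping', 'technical issue']);

-- Extract text plus layout from a staged file (stage and file name are hypothetical)
SELECT SNOWFLAKE.CORTEX.PARSE_DOCUMENT(@docs_stage, 'contract.pdf', {'mode': 'LAYOUT'});
```

EXTRACT_ANSWER and CLASSIFY_TEXT return structured values (an array of answers with scores and an object with a label, respectively), so in practice you would typically pull the field you need out of the result.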

Cortex functions can be used either by running SQL statements in Snowsight or by calling the functions in the “snowflake.cortex” package in Python.

Because the functions are also available in Python, they can be used for application development.

WHO CAN USE CORTEX FUNCTIONS?

CORTEX_USER is the database role that controls access to the functions in the CORTEX schema. It is granted to the PUBLIC role by default, which means every user can call the Cortex functions with the models they have access to and that are available in their region.

The CORTEX_USER database role can be granted or revoked using the ACCOUNTADMIN role. By revoking it from the PUBLIC role, you remove the default access to Cortex functions and can then grant CORTEX_USER only to designated roles for tighter security.
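A minimal sketch of that lockdown, run as ACCOUNTADMIN. The role genai_analyst and the user jane_doe are hypothetical names; substitute your own.

```sql
USE ROLE ACCOUNTADMIN;

-- Remove the default access that every user inherits through PUBLIC
REVOKE DATABASE ROLE SNOWFLAKE.CORTEX_USER FROM ROLE PUBLIC;

-- Create a dedicated role (hypothetical name) and give it Cortex access
CREATE ROLE IF NOT EXISTS genai_analyst;
GRANT DATABASE ROLE SNOWFLAKE.CORTEX_USER TO ROLE genai_analyst;

-- Assign the role to the users who should be able to call Cortex functions (hypothetical user)
GRANT ROLE genai_analyst TO USER jane_doe;
```

Granting through a dedicated account role keeps Cortex access in one place, so adding or removing users later does not require touching the database role again.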

MODELS

As mentioned above, the functions use pre-trained LLMs. These models differ in regional availability, capability and cost of usage. They fall into three categories:

- Large models

High capability, higher cost.

– claude-3-5-sonnet

– deepseek-r1, reka-core

– mistral-large, mistral-large2

– llama3.1-405b, snowflake-llama3.1-405b

- Medium models

Good balance of performance and cost.

– llama3.1-70b

– snowflake-arctic

– reka-flash, mixtral-8x7b

– jamba-instruct, jamba-1.5-mini, jamba-1.5-large

- Small models

Lower latency and budget-friendly, but lower capability.

– llama3.2-1b, llama3.2-3b, llama3.1-8b

– mistral-7b, gemma-7b
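One way to get a feel for the trade-off between tiers is to send the same prompt to one model from each category and compare the answers. This is a sketch only: the three model names come from the list above, but whether each is available depends on your region and account settings, and every call is billed.

```sql
-- Same prompt, one model per tier, side by side in a single result row
SELECT
    SNOWFLAKE.CORTEX.COMPLETE('llama3.1-8b',    'List three common uses of a data warehouse.') AS small_model,
    SNOWFLAKE.CORTEX.COMPLETE('llama3.1-70b',   'List three common uses of a data warehouse.') AS medium_model,
    SNOWFLAKE.CORTEX.COMPLETE('mistral-large2', 'List three common uses of a data warehouse.') AS large_model;
```

If the small model’s answer is good enough for your task, it is usually the cheaper choice at scale.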

When running LLMs, batch processing is the recommended approach if latency is not a concern. For interactive workloads, which mostly rely on the Agent APIs or on functions such as COMPLETE and EMBED, the REST API should be considered.
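A sketch of the batch approach: a single set-based query applies Cortex functions to every row of a table, rather than an application calling the model once per request. The tables product_reviews and review_insights and their columns are hypothetical.

```sql
-- Hypothetical source table of customer reviews
CREATE OR REPLACE TABLE product_reviews (review_id INT, review_text STRING);

-- One query scores and summarizes every review and stores the results
CREATE OR REPLACE TABLE review_insights AS
SELECT
    review_id,
    SNOWFLAKE.CORTEX.SENTIMENT(review_text) AS sentiment_score,
    SNOWFLAKE.CORTEX.SUMMARIZE(review_text) AS summary
FROM product_reviews;
```

Materializing the results into a table also means downstream dashboards read stored values instead of calling the model again.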

Model Availability In Terms Of Region

Model availability varies from one region to another: some models are available in a given region and others are not. If the model you want to use is not available in your region, set the CORTEX_ENABLED_CROSS_REGION parameter to a region group where the model is available (for example AWS_US), or to ANY_REGION as an alternative.

By default, the CORTEX_ENABLED_CROSS_REGION parameter is disabled. It is set at account level and requires the ACCOUNTADMIN role. Note also that cross-region availability does not apply to all models.

ALTER ACCOUNT SET CORTEX_ENABLED_CROSS_REGION = 'AWS_US';

ALTER ACCOUNT SET CORTEX_ENABLED_CROSS_REGION = 'ANY_REGION';

When using models that are available in other regions, take latency and cost into account. The models are invoiced according to the regions in which they are available.
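To see what the account is currently set to, or to switch cross-region inference off again, something like the following should work when run as ACCOUNTADMIN. This is a sketch; DISABLED is the parameter’s default value.

```sql
USE ROLE ACCOUNTADMIN;

-- Show the current value of the parameter for the account
SHOW PARAMETERS LIKE 'CORTEX_ENABLED_CROSS_REGION' IN ACCOUNT;

-- Turn cross-region inference off again
ALTER ACCOUNT SET CORTEX_ENABLED_CROSS_REGION = 'DISABLED';
```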

(See more details about model availability and the models themselves here.)

Understanding Costs

Model usage is billed per token processed, and the price per token is model-specific. Because cost is such an important factor, you may want to restrict which models can and cannot be used on your Snowflake account.
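Because billing is token-based, it can help to estimate token counts before running a large job and to review consumption afterwards. The sketch below uses the COUNT_TOKENS helper function and, as an assumption to verify in your account, the SNOWFLAKE.ACCOUNT_USAGE.CORTEX_FUNCTIONS_USAGE_HISTORY view with TOKENS and TOKEN_CREDITS columns; keep in mind that ACCOUNT_USAGE views lag behind real time.

```sql
-- Estimate how many tokens a prompt uses for a given model before running it
SELECT SNOWFLAKE.CORTEX.COUNT_TOKENS('mistral-large2', 'Summarize the key risks in this quarterly report.');

-- Review actual consumption per function and model over the last 7 days
-- (view and column names are assumptions; check them against your account)
SELECT
    function_name,
    model_name,
    SUM(tokens)        AS total_tokens,
    SUM(token_credits) AS total_credits
FROM SNOWFLAKE.ACCOUNT_USAGE.CORTEX_FUNCTIONS_USAGE_HISTORY
WHERE start_time >= DATEADD('day', -7, CURRENT_TIMESTAMP())
GROUP BY function_name, model_name
ORDER BY total_credits DESC;
```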

The CORTEX_MODELS_ALLOWLIST account parameter lets you allow all models, block all models, or allow only a specific list of models. Keep in mind that this restriction applies only to Cortex functions; it does not apply to other GEN-AI components such as Cortex Search, Cortex Agents, Document AI, etc.
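Before changing the allowlist, you can check its current value, and you can return to the default later by unsetting the parameter. A short sketch, run as ACCOUNTADMIN, assuming the default value is 'All' as described in Snowflake’s documentation:

```sql
USE ROLE ACCOUNTADMIN;

-- Show which models are currently allowed on the account
SHOW PARAMETERS LIKE 'CORTEX_MODELS_ALLOWLIST' IN ACCOUNT;

-- Remove any custom setting and fall back to the default
ALTER ACCOUNT UNSET CORTEX_MODELS_ALLOWLIST;
```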

ALTER ACCOUNT SET CORTEX_MODELS_ALLOWLIST = 'All';

ALTER ACCOUNT SET CORTEX_MODELS_ALLOWLIST = 'None';

ALTER ACCOUNT SET CORTEX_MODELS_ALLOWLIST = 'mistral-large2,llama3.1-70b';

Snowflake Cortex makes it easier than ever to integrate GenAI into data workflows without heavy engineering lift. Whether you’re summarizing documents, analyzing customer sentiment, or building AI-driven apps, Cortex offers a scalable, secure way to bring LLMs to your data. Keep an eye on this space: it’s just getting started.
